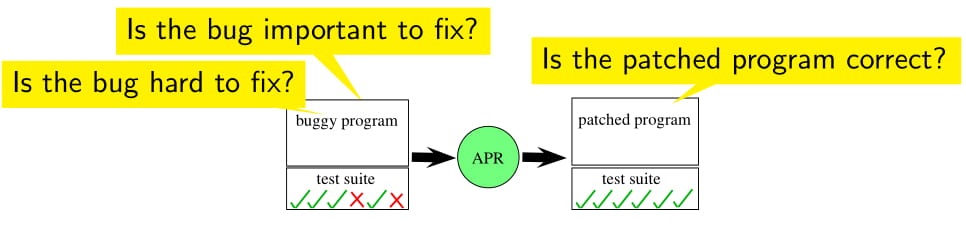

Automated program repair techniques use a buggy program and a partial specification (typically a test suite) to produce a program variant that satisfies the specification.
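To make that definition concrete, here is a minimal sketch of the generate-and-validate idea: try candidate edits to a buggy program and keep the variants that pass every test. It is a toy and not how any particular repair tool works. The buggy `max` function, the test suite, and all names (`make_max`, `passes_all`, `repair`) are hypothetical and invented for illustration.

```python
import operator

# Partial specification: a test suite of (inputs, expected output) pairs.
TESTS = [((3, 5), 5), ((7, 2), 7), ((4, 4), 4)]

# Hypothetical edit space: comparison operators to try in place of the buggy one.
CANDIDATE_OPS = {
    "<": operator.lt,
    ">": operator.gt,
    "<=": operator.le,
    ">=": operator.ge,
}


def make_max(compare):
    """Build a variant of max(a, b) that uses the given comparison."""
    def variant(a, b):
        return a if compare(a, b) else b
    return variant


def passes_all(program, tests):
    """Return True if the program produces the expected output on every test."""
    return all(program(*args) == expected for args, expected in tests)


def repair(tests):
    """Return the names of the operators whose variant passes every test."""
    return [
        name
        for name, compare in CANDIDATE_OPS.items()
        if passes_all(make_max(compare), tests)
    ]


buggy = make_max(operator.lt)  # bug: returns the smaller value
print("buggy passes:", passes_all(buggy, TESTS))
print("passing variants:", repair(TESTS))
```

Note that more than one variant can satisfy the test suite. Because the specification is only partial, passing all tests does not by itself guarantee that a patch is correct.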

The number of publications per year in this area of research has increased by an order of magnitude, a sign of how actively the state of the art is advancing. Prior work has evaluated repair techniques in terms of the fraction of defects they repair, the computational resources required to repair defects, the correctness and quality of generated patches, and how easy the patches are to maintain. However, we still don’t know whether these repair tools can repair the bugs that developers actually care about. Questions such as “Can automated repair techniques repair defects that are hard for developers to fix?” and “Can automated repair techniques repair defects that involve editing loops?” have not yet been answered.

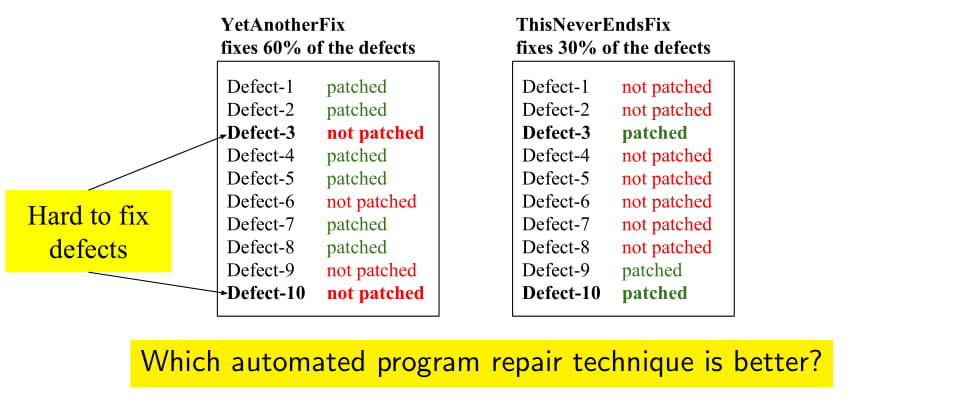

Suppose I tell you that there are two automated program repair techniques: YetAnotherFix fixes 60% of the defects in some benchmark, and ThisNeverEndsFix fixes only 30% of the defects in the same benchmark. If these techniques were submitted today to a conference like ICSE, YetAnotherFix would be more likely to get accepted because it fixes more defects. Now, what if I tell you that ThisNeverEndsFix actually fixes defects that take developers days of work, while YetAnotherFix fixes only trivial, easy-to-fix defects? Developers would likely prefer to have the hard-to-fix bugs repaired automatically. Hence, it is important to evaluate repair techniques along this new dimension.
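The thought experiment can be written down in a few lines. The sketch below uses an invented ten-defect benchmark; the fix times, the sets of repaired defects, and the one-day cutoff for a "hard" defect are all assumptions chosen only to mirror the 60% versus 30% scenario.

```python
# Hypothetical benchmark: defect id -> days a developer needed to fix it.
# All numbers are invented to mirror the thought experiment above.
FIX_DAYS = {
    1: 0.1, 2: 0.2, 3: 0.1, 4: 0.3, 5: 0.2, 6: 0.1,  # quick fixes
    7: 4.0, 8: 6.0, 9: 3.0, 10: 5.0,                 # multi-day fixes
}

# Hypothetical repair results for the two imaginary techniques.
REPAIRED = {
    "YetAnotherFix": {1, 2, 3, 4, 5, 6},  # 6 of 10 defects, all quick fixes
    "ThisNeverEndsFix": {7, 8, 9},        # 3 of 10 defects, all multi-day fixes
}

HARD_THRESHOLD_DAYS = 1.0  # assumed cutoff for calling a defect "hard"


def repair_rate(repaired, defects):
    """Fraction of the given defects that appear in the repaired set."""
    defects = set(defects)
    return len(repaired & defects) / len(defects)


hard = {d for d, days in FIX_DAYS.items() if days >= HARD_THRESHOLD_DAYS}

for name, repaired in REPAIRED.items():
    overall = repair_rate(repaired, FIX_DAYS)
    on_hard = repair_rate(repaired, hard)
    print(f"{name}: overall {overall:.0%}, hard defects {on_hard:.0%}")
```

On this made-up data, the technique with the higher overall repair rate repairs none of the hard defects, which is exactly the gap that a single aggregate percentage hides.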

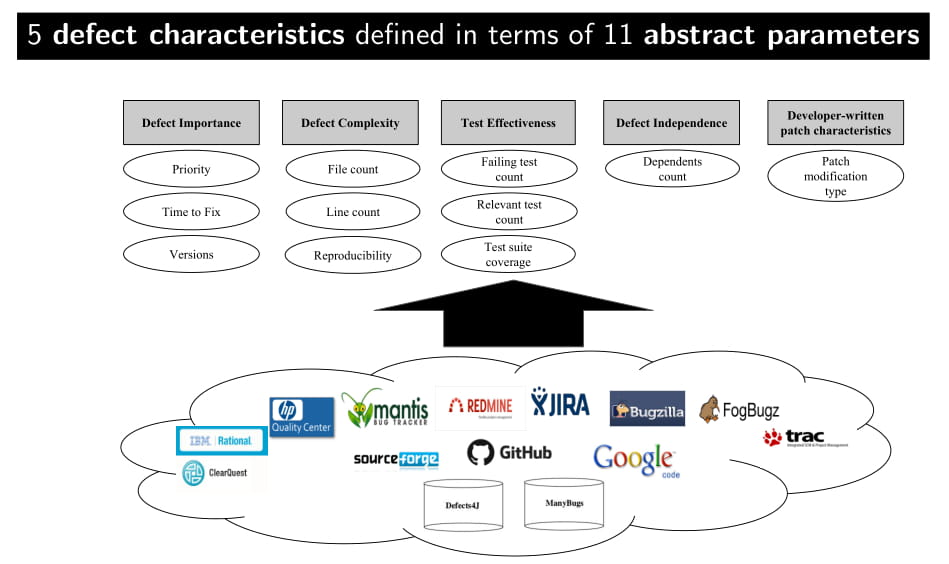

In my research group at the College of Information and Computer Sciences, UMass Amherst, we performed a study to develop a methodology for defining the hardness and importance of defects and for evaluating automated repair techniques along these new dimensions. To measure hardness and importance, we analyzed 8 popular bug tracking systems, 3 popular open-source code repositories, and 2 defect benchmarks to identify 11 abstract parameters relevant to 5 defect characteristics: importance, complexity, test effectiveness, independence, and characteristics of the developer-written patch.

We computed these parameters for two widely used defect benchmarks for automated program repair, ManyBugs (185 C defects) and Defects4J (357 Java defects). Finally, we considered nine automated repair techniques for C and Java (AE, GenProg, a Java reimplementation of GenProg, Kali, a Java reimplementation of Kali, Nopol, Prophet, SPR, and TrpAutoRepair) and performed statistical tests on the techniques’ repairability results to answer the following questions: Is a repair technique’s ability to produce a patch for a defect correlated with that defect’s (RQ1) importance, (RQ2) complexity, (RQ3) effectiveness of the test suite, or (RQ4) dependence on other defects? (RQ5) What characteristics of the developer-written patch are significantly associated with a repair technique’s ability to produce a patch? And (RQ6) what defect characteristics are significantly associated with a repair technique’s ability to produce a high-quality patch?
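As a rough illustration of what correlating "ability to produce a patch" with a defect parameter can look like, the sketch below computes a Spearman rank correlation between a patched/not-patched flag and the number of lines in the developer's patch. This post does not say which statistical tests the study used, so Spearman is an assumed stand-in, significance testing is omitted, and the data is invented.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Invented data: lines changed in the developer-written patch, and whether a
# hypothetical repair technique produced a patch (1) or not (0).
lines_changed = [1, 2, 3, 5, 8, 13, 21, 34]
patched = [1, 1, 1, 0, 1, 0, 0, 0]

rho = spearman(lines_changed, patched)
print(f"Spearman rho = {rho:.2f}")
```

A negative coefficient on data like this would mean the technique tends to patch defects whose developer fixes were smaller. A real analysis would also need a significance test and a check that each parameter is measured consistently across defects.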

We found that:

- (RQ1) Java repair techniques are moderately more likely to patch higher-priority defects; for C, there is no correlation. There is little to no consistent correlation between producing a patch and either the time developers took to fix the defect or the number of software versions affected by that defect.

- (RQ2) C repair techniques are less likely to patch defects that required developers to write more lines of code and edit more files.

- (RQ3) Java repair techniques are less likely to patch defects with more triggering or more relevant tests. Test suite statement coverage has little to no consistent correlation with producing a patch.

- (RQ4) Java repair techniques’ ability to patch a defect does not correlate with that defect’s dependence on other defects.

- (RQ5) Repair techniques struggle to produce patches for defects that required developers to insert loops or new function calls, or change method signatures.

- (RQ6) Finally, only two of the considered repair techniques, Prophet and SPR, produce a sufficient number of high-quality patches to evaluate. These techniques were less likely to patch more complex defects, and they were even less likely to patch them correctly.

Our findings raise concerns about automated repair’s applicability in practice, but they also offer promise that, in some situations, automated repair can properly patch important and hard defects. Recent work on evaluating repair quality has led to efforts to improve the quality of patches produced by automated repair. We believe that our work will similarly inspire new research into improving the applicability of automated repair to hard and important defects.

To read more about our research, see:

- The annotation of 409 defects in ManyBugs and Defects4J, used for evaluating automated repair applicability, is publicly available at https://github.com/LASER-UMASS/AutomatedRepairApplicabilityData

- The full version of this article can be found at http://dx.doi.org/10.1007/s10664-017-9550-0 (Manish Motwani, Sandhya Sankaranarayanan, René Just, and Yuriy Brun, Do Automated Program Repair Techniques Repair Hard and Important Bugs?, Empirical Software Engineering (EMSE), 2018).

I am a PhD candidate in Computer Science, specializing in Software Engineering. I work at LASER, College of Information and Computer Sciences, UMass Amherst. My research focuses on the evaluation and advancement of automated program repair techniques.